Lecture 3: Disk Management and Indexes

Gittu George, January 14, 2025

Announcements

Everything you need to work on Assignment 1 is available on the course website (in your lecture notes). Start early.

Today's Agenda

HOW do humans store and search data?

HOW does Postgres store data?

HOW does Postgres search data?

WHY indexes?

WHAT are indexes?

Different kinds of indexes

Do I need an index?

Learning objectives

You will apply knowledge of disk management and query structure to optimize complex queries.

You will understand the different types of INDEXes available in Postgres and the applications each is best suited for.

You will be able to profile and optimize various queries to provide the fastest response.

You already have the data we will use in this lecture from Worksheet 2. If not, please load it so that you can follow along with me in lecture: you can load it into your database using this dump (worksheet2). It is a zip file, so make sure you extract it first. I will use the testindex table in the fakedata schema for the demonstrations.

How do humans store and search data?

Think about how we stored data before the era of computers: we stored it in books. Let's take this children's encyclopedia as an example.


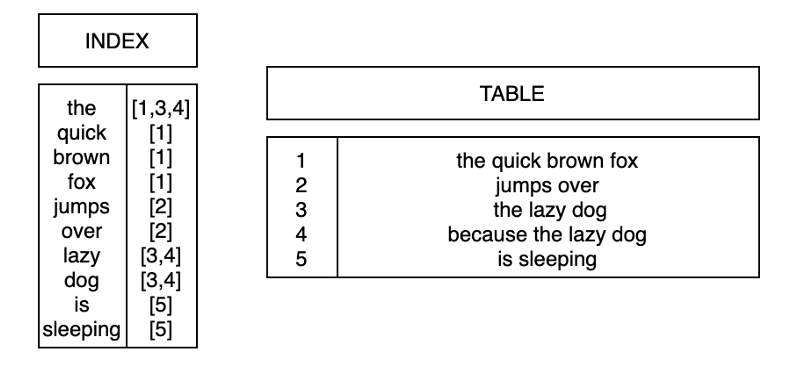

There are around 300 pages in this book. If a kid wants to know about China, they will go from the first page until they reach page 48. Other pages might talk about China too, so the kid has to go over all the pages to gather all the information about China.

This is how a kid would search, but we are grown-ups and know about a book's index. If you want to read about China, you go to the index of the book, look up China, and jump straight to page 48 (and to any other pages listed there). If you want to read some history about indexes, check out this article.

OKAY, now you know how humans search for data in a book; Postgres stores and searches data in a similar way.

How does Postgres store data?

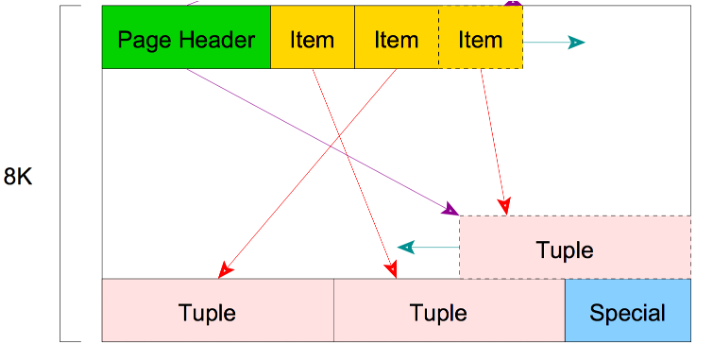

A database also stores information in pages. A database page is not structured like a book page; it is structured as shown in the figure.


Each page is an 8 kB block. It starts with a header of metadata (green), followed by item identifiers (yellow) that point to the tuples (rows) stored in the page. It ends with a special section (blue), which is empty in ordinary table pages; index pages use it to store information needed by the index access method.

So a table within a database is stored as a list of pages, and each page stores multiple tuples of that table.

Read more about it here.
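To make the page layout concrete, here is a rough capacity estimate in Python. It is a simplified model, not Postgres' exact arithmetic: it assumes a 24-byte page header and 4-byte item identifiers, treats `tuple_bytes` as the full size of one row including its own header, and ignores alignment padding and fillfactor. The 64-byte row and the 11-million-row table are hypothetical.

```python
# Simplified capacity model for an 8 kB Postgres heap page.
# Ignores alignment padding, fillfactor and TOAST, so real pages hold somewhat fewer rows.
PAGE_SIZE = 8192    # bytes per page (Postgres' default block size)
PAGE_HEADER = 24    # page header metadata (green)
ITEM_ID = 4         # one item identifier per tuple (yellow)


def rows_per_page(tuple_bytes: int, special_bytes: int = 0) -> int:
    """Tuples of `tuple_bytes` (including their own header) that fit in one page."""
    usable = PAGE_SIZE - PAGE_HEADER - special_bytes  # special section (blue) is empty for tables
    return usable // (ITEM_ID + tuple_bytes)


def pages_needed(n_rows: int, tuple_bytes: int) -> int:
    """Pages a full sequential scan has to read for `n_rows` rows."""
    per_page = rows_per_page(tuple_bytes)
    return -(-n_rows // per_page)  # ceiling division


print(rows_per_page(64))             # 120
print(pages_needed(11_000_000, 64))  # 91667
```

Even under these generous assumptions, a table of this size spans tens of thousands of pages, which is what a sequential scan has to walk through.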

How does Postgres search data?

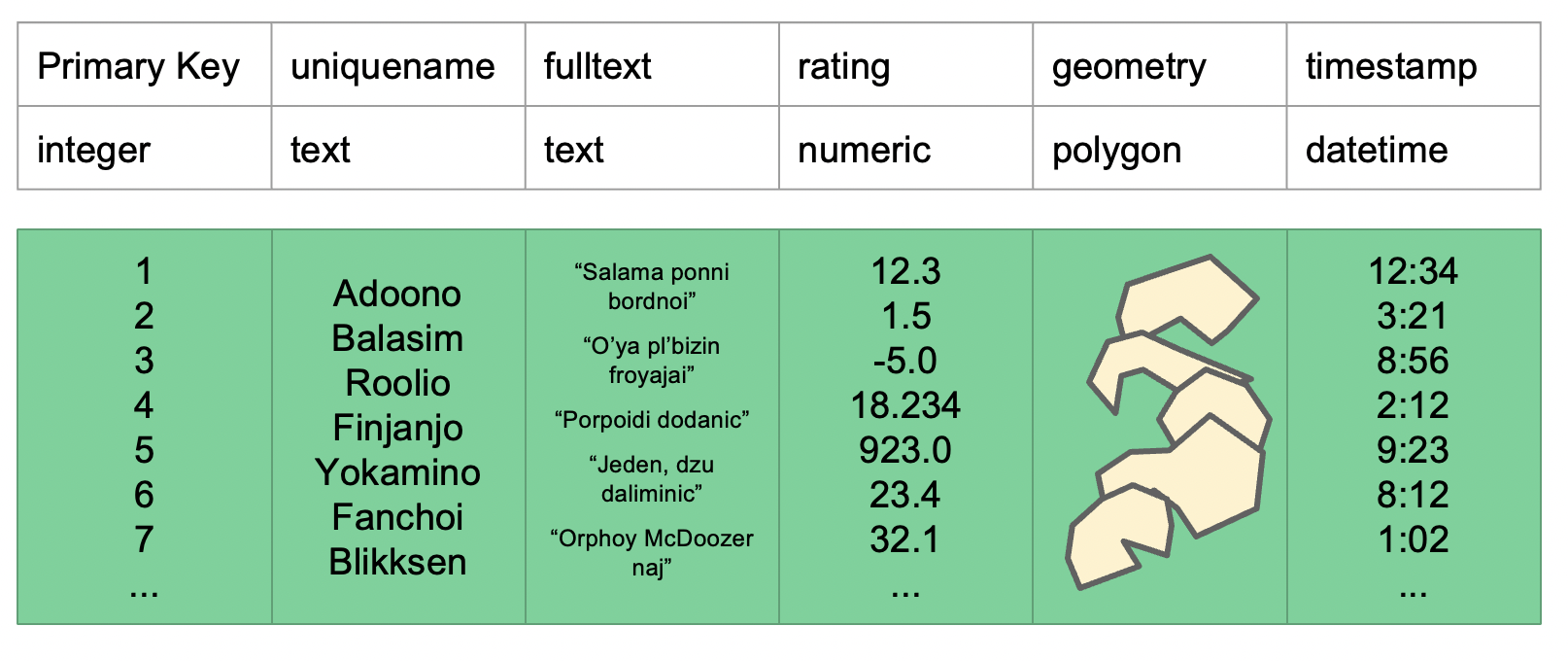

Let's consider the following table.

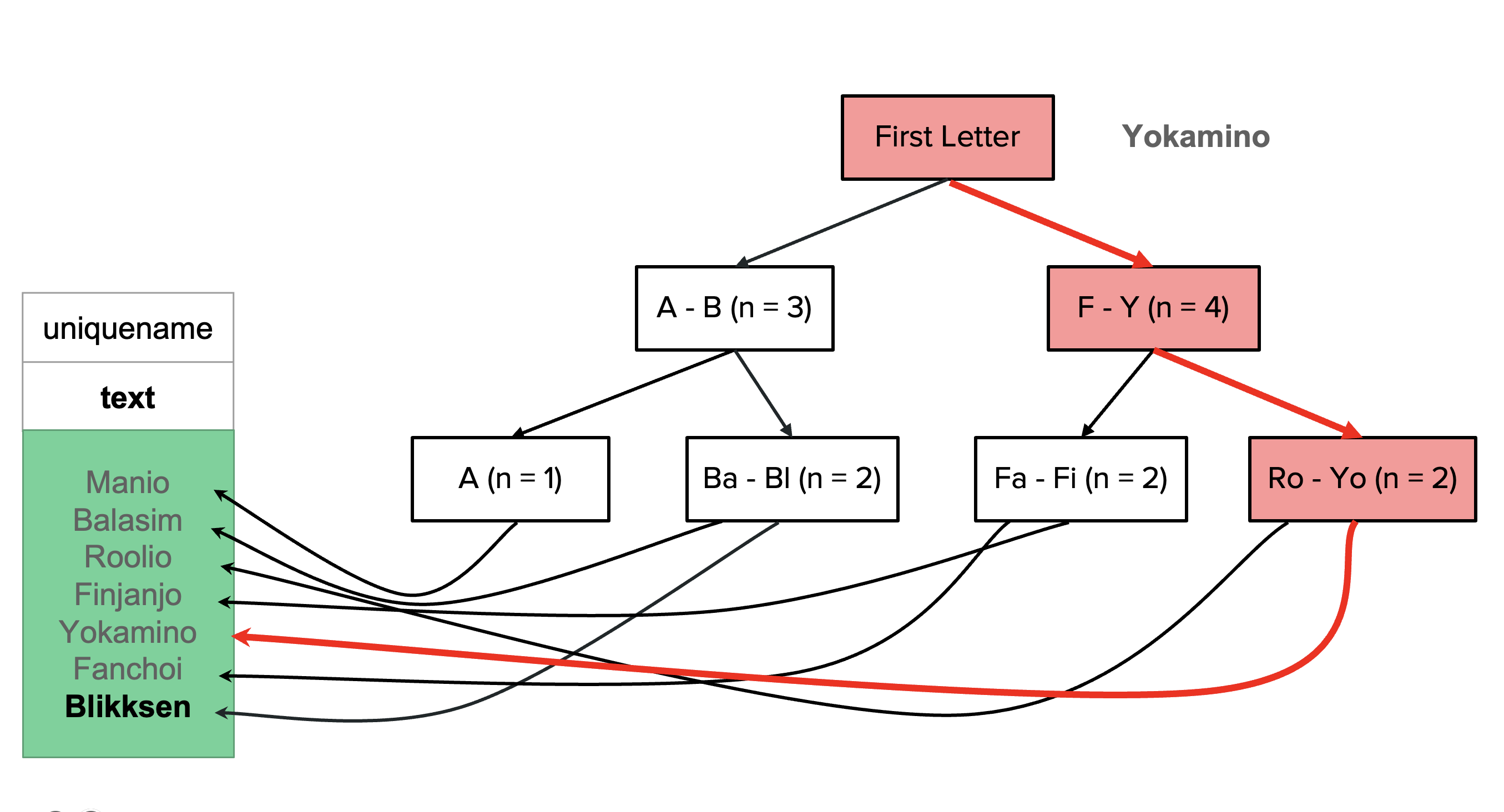

For this explanation, let's assume the contents of this table are stored in 3 pages. We want to find the rows whose name starts with y. A database, just like the kid, has to search through all 3 pages to find those rows. This is a sequential scan, and it is how a database searches by default. It can be slow when millions of rows are spread across many pages, because the database has to read every page to find the names that start with y.

Also, in this example, let's assume that Yokamino is on page 2. The database finds it on page 2 but still goes on to page 3 looking for other names that start with y. WHY? Because it doesn't know that there is only one name that starts with y.
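The sequential scan described above can be sketched in a few lines of Python. The three "pages" and all names except Yokamino are hypothetical; the point is that the scan reads every page even after it has found the only match.

```python
# Hypothetical table: each inner list is one page; only the name column is shown.
pages = [
    ["Ana", "Bo", "Cy"],          # page 1
    ["Dee", "Yokamino", "Eve"],   # page 2
    ["Fay", "Gil", "Hal"],        # page 3
]


def seq_scan(pages, predicate):
    """Read every page in order and return (matching rows, pages read)."""
    matches, pages_read = [], 0
    for page in pages:
        pages_read += 1  # no early exit: a later page might hold another match
        matches.extend(row for row in page if predicate(row))
    return matches, pages_read


rows, read = seq_scan(pages, lambda name: name.lower().startswith("y"))
print(rows, read)  # ['Yokamino'] 3
```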

Let's look at how Postgres searches for data using EXPLAIN. We will do this on the table fakedata.testindex.

import pandas as pd

import matplotlib.pyplot as plt

import psycopg2  # PostgreSQL driver used behind the %sql connection

import json

import urllib.parse

%load_ext sql

%config SqlMagic.displaylimit = 20

%config SqlMagic.autolimit = 30

with open('credentials.json') as f:  # connection details are kept out of the notebook

    login = json.load(f)

username = login['user']

password = urllib.parse.quote(login['password'])  # URL-encode special characters for the connection string

host = login['host']

port = login['port']


%sql postgresql://{username}:{password}@{host}:{port}/postgres

%%sql

DROP INDEX if exists fakedata.hash_testindex_index;

DROP INDEX if exists fakedata.pgweb_idx;

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

Done.

Done.

[]

Here, we run an ordinary exact-match search for a product name in the productname column.

%%sql

explain analyze select count(*) from fakedata.testindex where productname = 'flavor halibut';

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

10 rows affected.

| QUERY PLAN |

|---|

| Finalize Aggregate (cost=144373.50..144373.51 rows=1 width=8) (actual time=3496.595..3501.765 rows=1 loops=1) |

| -> Gather (cost=144373.29..144373.50 rows=2 width=8) (actual time=3493.596..3501.754 rows=3 loops=1) |

| Workers Planned: 2 |

| Workers Launched: 2 |

| -> Partial Aggregate (cost=143373.29..143373.30 rows=1 width=8) (actual time=3486.634..3486.636 rows=1 loops=3) |

| -> Parallel Seq Scan on testindex (cost=0.00..143373.22 rows=25 width=0) (actual time=3486.630..3486.631 rows=0 loops=3) |

| Filter: (productname = 'flavor halibut'::text) |

| Rows Removed by Filter: 3724110 |

| Planning Time: 1.072 ms |

| Execution Time: 3501.823 ms |

Next, we run a pattern-matching search that counts all the product names starting with fla.

%%sql

EXPLAIN ANALYZE SELECT COUNT(*) FROM fakedata.testindex WHERE productname LIKE 'fla%';

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

10 rows affected.

| QUERY PLAN |

|---|

| Finalize Aggregate (cost=144374.60..144374.61 rows=1 width=8) (actual time=984.070..987.852 rows=1 loops=1) |

| -> Gather (cost=144374.39..144374.60 rows=2 width=8) (actual time=976.739..987.840 rows=3 loops=1) |

| Workers Planned: 2 |

| Workers Launched: 2 |

| -> Partial Aggregate (cost=143374.39..143374.40 rows=1 width=8) (actual time=975.448..975.449 rows=1 loops=3) |

| -> Parallel Seq Scan on testindex (cost=0.00..143373.22 rows=465 width=0) (actual time=1.186..974.307 rows=9271 loops=3) |

| Filter: (productname ~~ 'fla%'::text) |

| Rows Removed by Filter: 3714839 |

| Planning Time: 0.092 ms |

| Execution Time: 987.889 ms |

Note

Postgres turns each query into an internal execution plan, breaking it into specific steps that are reordered and optimized. EXPLAIN shows this plan; EXPLAIN ANALYZE also runs the query and reports the actual row counts and timings. Read more about EXPLAIN here. This blog also explains it well.

Computers go through pages much faster than humans do, but reading every page is still inefficient. Wouldn't it be cool to tell the database that the data you are looking for is only on certain pages, so it doesn't have to go through all of them? This is WHY we want indexes. Let's see whether indexing can speed up the previous queries.

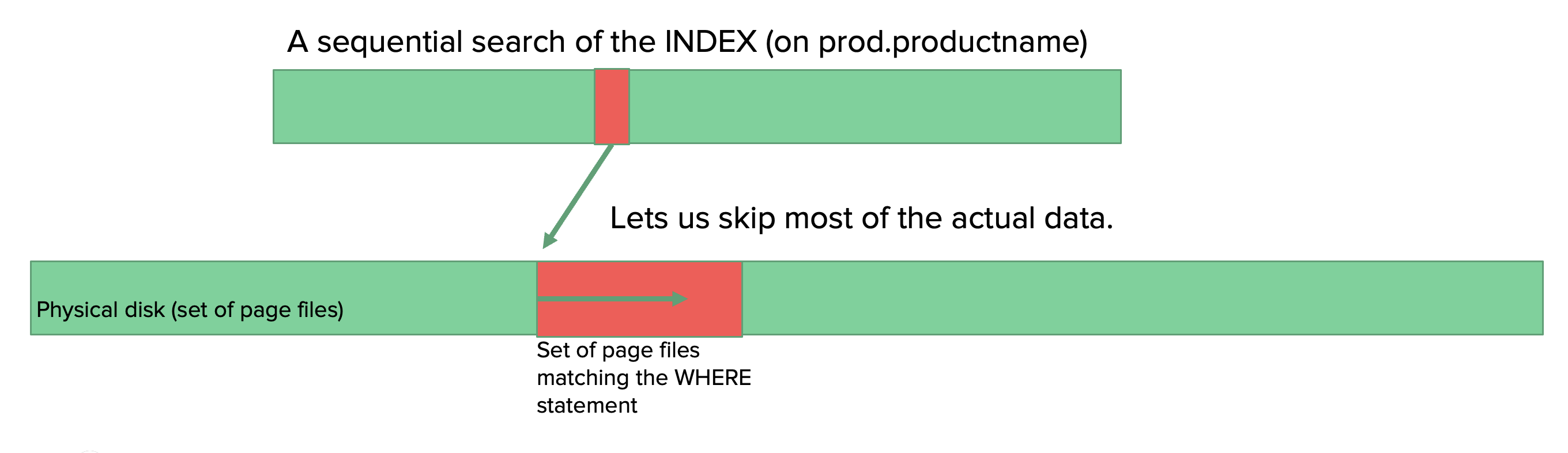

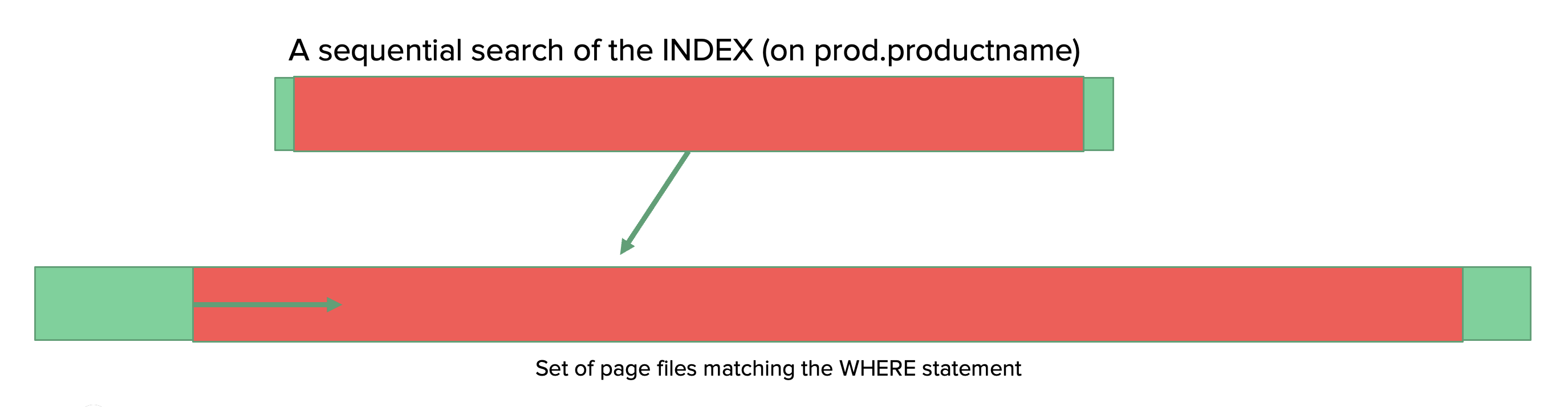

WHAT are indexes?

An index is effectively a map of where each row sits in the table's page files. Instead of scanning the whole table, the database searches the index to find pointers to the page(s) that hold the matching rows, then goes straight to those pages and skips the rest.
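Here is a minimal Python sketch of that idea, in the spirit of a book's index. A plain dictionary stands in for the index structure (real Postgres indexes are on-disk structures such as B-trees), it only supports exact matches, and the three-page table is hypothetical.

```python
from collections import defaultdict

# Hypothetical 3-page table (names only).
pages = [
    ["Ana", "Bo", "Cy"],
    ["Dee", "Yokamino", "Eve"],
    ["Fay", "Gil", "Hal"],
]


def build_index(pages):
    """Map each value to the (page, slot) pointers where it is stored."""
    index = defaultdict(list)
    for page_no, page in enumerate(pages, start=1):
        for slot, value in enumerate(page, start=1):
            index[value].append((page_no, slot))
    return index


def index_lookup(pages, index, value):
    """Follow the pointers and read only the pages they point to."""
    rows, pages_read = [], set()
    for page_no, slot in index.get(value, []):
        pages_read.add(page_no)
        rows.append(pages[page_no - 1][slot - 1])
    return rows, len(pages_read)


idx = build_index(pages)
print(index_lookup(pages, idx, "Yokamino"))  # (['Yokamino'], 1)
print(index_lookup(pages, idx, "Zed"))       # ([], 0)
```

Only one page is read for Yokamino, and a value that is not in the index costs no page reads at all.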

An index is created on a column (or a set of columns) of a table. Since we have been querying the productname column, let's create an index on it and see how the query execution plan changes. Will it improve the speed? Let's see.

%%sql

CREATE INDEX if not exists default_testindex_index ON fakedata.testindex (productname varchar_pattern_ops);

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

Done.

[]

%%sql

explain analyze select count(*) from fakedata.testindex where productname = 'flavor halibut';

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

6 rows affected.

| QUERY PLAN |

|---|

| Aggregate (cost=9.65..9.66 rows=1 width=8) (actual time=0.028..0.029 rows=1 loops=1) |

| -> Index Only Scan using default_testindex_index on testindex (cost=0.43..9.50 rows=61 width=0) (actual time=0.025..0.025 rows=0 loops=1) |

| Index Cond: (productname = 'flavor halibut'::text) |

| Heap Fetches: 0 |

| Planning Time: 0.295 ms |

| Execution Time: 0.061 ms |

%%sql

EXPLAIN ANALYZE

SELECT COUNT(*) FROM fakedata.testindex

WHERE productname LIKE 'fla%';

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

7 rows affected.

| QUERY PLAN |

|---|

| Aggregate (cost=11.25..11.26 rows=1 width=8) (actual time=4.835..4.836 rows=1 loops=1) |

| -> Index Only Scan using default_testindex_index on testindex (cost=0.43..8.46 rows=1117 width=0) (actual time=0.067..3.582 rows=27814 loops=1) |

| Index Cond: ((productname ~>=~ 'fla'::text) AND (productname ~<~ 'flb'::text)) |

| Filter: (productname ~~ 'fla%'::text) |

| Heap Fetches: 0 |

| Planning Time: 0.285 ms |

| Execution Time: 4.879 ms |

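Hurrayyy!!!! Both queries are now much faster, and the plans show that the query planner used the index (Index Only Scan) instead of a sequential scan: execution time dropped from about 3,500 ms to 0.06 ms for the exact match and from about 990 ms to about 5 ms for the prefix search.

But keep in mind that if the selection criteria are too broad, or the index is too imprecise, the query planner may skip it.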

Let’s try that out.

%%sql

EXPLAIN ANALYZE

SELECT COUNT(*) FROM fakedata.testindex

WHERE productname LIKE '%';

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

9 rows affected.

| QUERY PLAN |

|---|

| Finalize Aggregate (cost=156010.12..156010.13 rows=1 width=8) (actual time=1180.875..1182.041 rows=1 loops=1) |

| -> Gather (cost=156009.90..156010.11 rows=2 width=8) (actual time=1177.104..1182.029 rows=3 loops=1) |

| Workers Planned: 2 |

| Workers Launched: 2 |

| -> Partial Aggregate (cost=155009.90..155009.91 rows=1 width=8) (actual time=1171.861..1171.862 rows=1 loops=3) |

| -> Parallel Seq Scan on testindex (cost=0.00..143373.22 rows=4654672 width=0) (actual time=0.034..877.504 rows=3724110 loops=3) |

| Filter: (productname ~~ '%'::text) |

| Planning Time: 0.076 ms |

| Execution Time: 1182.077 ms |

It's good that database engines are smart enough to decide whether it is better to use an index or to perform a sequential scan. Here the planner recognizes that the query is too broad: LIKE '%' matches every non-NULL product name, so it will end up reading all the rows anyway, and a sequential scan is cheaper than looking each row up through the index.
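A toy version of that decision in Python, assuming Postgres' default `seq_page_cost = 1.0` and `random_page_cost = 4.0` and a hypothetical 90,000-page, 11-million-row table. It is not the planner's real cost formula (which also counts CPU work, caching, and bitmap scans); it only shows why an index stops paying off once a query matches many rows.

```python
# Toy cost model: only the flavour of Postgres' planner, not its actual formula.
SEQ_PAGE_COST = 1.0     # Postgres default for seq_page_cost
RANDOM_PAGE_COST = 4.0  # Postgres default for random_page_cost


def seq_scan_cost(table_pages):
    return table_pages * SEQ_PAGE_COST  # every page read once, in order


def index_scan_cost(matching_rows):
    # Pessimistic: every matching row sits on a different, randomly located page.
    return matching_rows * RANDOM_PAGE_COST


def choose_plan(table_pages, matching_rows):
    if index_scan_cost(matching_rows) < seq_scan_cost(table_pages):
        return "index scan"
    return "seq scan"


TABLE_PAGES = 90_000     # hypothetical table size in pages
TOTAL_ROWS = 11_000_000  # hypothetical row count
print(choose_plan(TABLE_PAGES, 30))          # index scan
print(choose_plan(TABLE_PAGES, TOTAL_ROWS))  # seq scan
```

In this model the break-even point is 22,500 matching rows: beyond it, the random page reads cost more than simply reading the whole table in order.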

Because the index is a map stored on disk, it takes up disk space. Let's see how much space it is using (pg_indexes_size reports the total size of all indexes on the table):

%%sql

SELECT pg_size_pretty (pg_indexes_size('fakedata.testindex'));

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

1 rows affected.

| pg_size_pretty |

|---|

| 83 MB |

Now we realize indexes are great! So let’s try some different queries.

%%sql

EXPLAIN ANALYZE

SELECT COUNT(*) FROM fakedata.testindex

WHERE productname LIKE '%fla%';

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

10 rows affected.

| QUERY PLAN |

|---|

| Finalize Aggregate (cost=144374.60..144374.61 rows=1 width=8) (actual time=971.599..972.735 rows=1 loops=1) |

| -> Gather (cost=144374.39..144374.60 rows=2 width=8) (actual time=971.590..972.728 rows=3 loops=1) |

| Workers Planned: 2 |

| Workers Launched: 2 |

| -> Partial Aggregate (cost=143374.39..143374.40 rows=1 width=8) (actual time=964.961..964.962 rows=1 loops=3) |

| -> Parallel Seq Scan on testindex (cost=0.00..143373.22 rows=465 width=0) (actual time=0.165..962.624 rows=22257 loops=3) |

| Filter: (productname ~~ '%fla%'::text) |

| Rows Removed by Filter: 3701853 |

| Planning Time: 0.082 ms |

| Execution Time: 972.769 ms |

This was not a broad search: we only wanted the product names that contain fla anywhere. So why didn't the index speed it up? A B-tree keeps values sorted starting from their first character, so it can narrow down a prefix search such as LIKE 'fla%', but it cannot help when the pattern starts with a wildcard, as in LIKE '%fla%'. So the default index is not helpful in every case. That's why it's important to know the different kinds of indexes and to have a general understanding of how they work; this will help you choose the right index in various situations.

Different kinds of indexes

In this section, we will go through various kinds of indexes and the syntax for creating them:

B-Tree (balanced tree, btree)

Hash

GIST (Generalized Search Tree)

GIN (Generalized Inverted Index)

BRIN (Block Range Index)

Each supports its own set of operations, tied to specific Postgres functions and operators. You can read more about the index types here.

The general syntax for making an index:

CREATE INDEX indexname ON schema.tablename USING method (columnname opclass);

B-Tree

B-Tree is the default index type, and it is the one we created earlier on the productname column.

General syntax (the operator class is optional; read more about it here):

CREATE INDEX if not exists index_name ON schema.tablename (columnname Operator_Classes);

Example:

CREATE INDEX if not exists default_testindex_index ON fakedata.testindex (productname varchar_pattern_ops);

Note

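If you are creating a B-Tree index on a numeric column, you don't need to specify an operator class. The varchar_pattern_ops operator class is for character columns: it compares values character by character rather than by locale-specific collation rules, which lets the index support prefix searches such as LIKE 'fla%'.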

You will do it on a numeric column in worksheet 3.

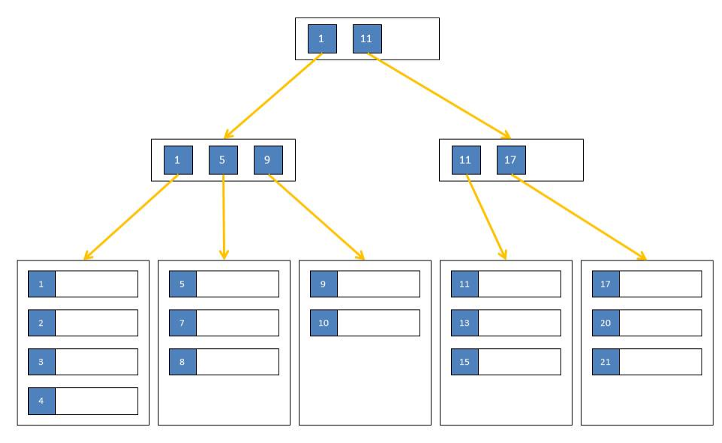

Values are balanced across nodes so that the tree stays shallow.

Each internal node splits the range of values; each "leaf" points to row addresses.

All leaves are at the same distance from the root.

A B-tree handles not just numbers but also text columns; that's why I gave varchar_pattern_ops as the operator class. In the example in the figure, the tree finds the value we are looking for in just 3 steps, rather than scanning all 7 rows.
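The key property a B-tree gives us is sorted order, which allows logarithmic lookups and prefix ranges. This Python sketch imitates that with binary search (`bisect`) over one sorted list rather than a real multi-level tree, and the product list is hypothetical. It rewrites LIKE 'fla%' as the range 'fla' <= key < 'flb', the same rewrite shown in the Index Cond of the earlier plan, and shows that '%fla%' still has to check every row. Python compares strings by code point, which is close to the character-by-character comparison of varchar_pattern_ops.

```python
from bisect import bisect_left, bisect_right


class SortedIndex:
    """Toy ordered index: sorted keys with their row ids.

    A real B-tree stores these in balanced pages and descends from the root;
    binary search over one sorted list gives the same kind of O(log n) lookup.
    """

    def __init__(self, values):
        pairs = sorted((value, row_id) for row_id, value in enumerate(values))
        self.keys = [key for key, _ in pairs]
        self.row_ids = [row_id for _, row_id in pairs]

    def equals(self, value):
        """productname = value: row ids of all keys equal to value."""
        start = bisect_left(self.keys, value)
        stop = bisect_right(self.keys, value)
        return self.row_ids[start:stop]

    def prefix(self, prefix):
        """productname LIKE 'prefix%': the range prefix <= key < next prefix."""
        if not prefix:
            return list(self.row_ids)
        upper = prefix[:-1] + chr(ord(prefix[-1]) + 1)  # 'fla' -> 'flb'
        start = bisect_left(self.keys, prefix)
        stop = bisect_left(self.keys, upper)
        return self.row_ids[start:stop]


def substring_scan(values, needle):
    """productname LIKE '%needle%': sorted order doesn't help, so check every row."""
    return [row_id for row_id, value in enumerate(values) if needle in value]


products = ["flavor halibut", "steel flask", "flat tire", "oak table", "flavor cod"]
idx = SortedIndex(products)
print(sorted(idx.prefix("fla")))        # [0, 2, 4]
print(idx.equals("oak table"))          # [3]
print(substring_scan(products, "fla"))  # [0, 1, 2, 4]
```

"steel flask" contains fla but does not start with it, so it sits far away from the other matches in sorted order; that is why the prefix range cannot find it.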

Hash#

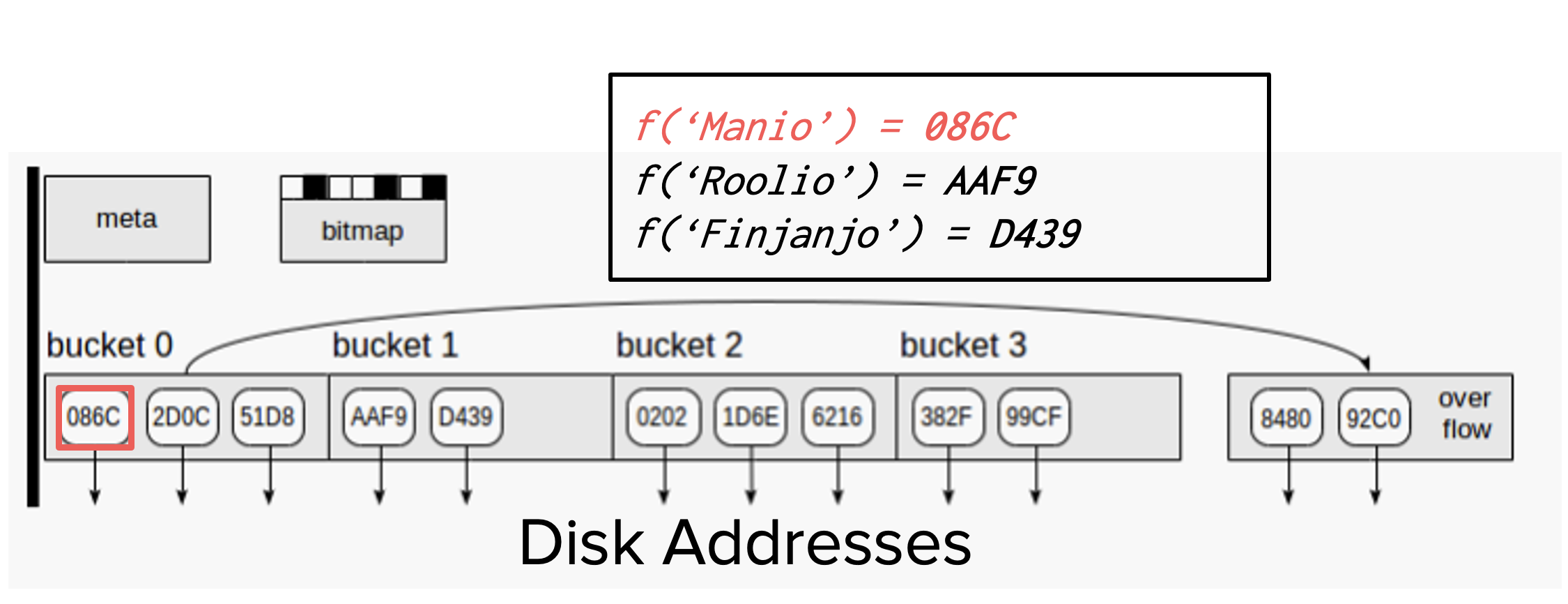

Cell values are hashed (encoded) form and mapped to address “buckets”

Hash -> bucket mappings -> disk address

The hash function tries to balance the number of buckets & number of addresses within a bucket

Hash only supports EQUALITY
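The hash -> bucket -> address idea can be sketched in Python as follows. This is a toy, not Postgres' hash index: `zlib.crc32` is a stand-in for Postgres' internal hash function, the bucket count of 8 and the product list are hypothetical, and this sketch keeps the value in the bucket for the final check, whereas Postgres' hash index stores only the hash code and rechecks against the table row. Because similar strings get unrelated hash codes, there is no way to ask for "everything starting with fla".

```python
import zlib


class HashIndex:
    """Toy hash index: hash code -> bucket -> row ids (equality lookups only)."""

    def __init__(self, values, n_buckets=8):
        self.n_buckets = n_buckets
        self.buckets = [[] for _ in range(n_buckets)]
        for row_id, value in enumerate(values):
            self.buckets[self._bucket(value)].append((value, row_id))

    def _bucket(self, value):
        # crc32 is a stable stand-in for Postgres' internal hash function
        return zlib.crc32(value.encode("utf-8")) % self.n_buckets

    def equals(self, value):
        """Visit a single bucket, then recheck values to weed out collisions."""
        bucket = self.buckets[self._bucket(value)]
        return [row_id for stored, row_id in bucket if stored == value]


products = ["flavor halibut", "steel flask", "flat tire", "oak table", "flavor cod"]
idx = HashIndex(products)
print(idx.equals("flavor halibut"))  # [0]
print(idx.equals("flavor"))          # []
```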

Let's create a hash index on the productname column and rerun the queries from before.

%%sql

DROP INDEX fakedata.default_testindex_index;

CREATE INDEX if not exists hash_testindex_index ON fakedata.testindex USING hash(productname);

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

Done.

Done.

[]

%%sql

explain analyze select count(*) from fakedata.testindex where productname = 'flavor halibut';

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

7 rows affected.

| QUERY PLAN |

|---|

| Aggregate (cost=244.49..244.50 rows=1 width=8) (actual time=0.014..0.015 rows=1 loops=1) |

| -> Bitmap Heap Scan on testindex (cost=4.47..244.34 rows=61 width=0) (actual time=0.011..0.011 rows=0 loops=1) |

| Recheck Cond: (productname = 'flavor halibut'::text) |

| -> Bitmap Index Scan on hash_testindex_index (cost=0.00..4.46 rows=61 width=0) (actual time=0.009..0.010 rows=0 loops=1) |

| Index Cond: (productname = 'flavor halibut'::text) |

| Planning Time: 0.131 ms |

| Execution Time: 0.041 ms |

%%sql

EXPLAIN ANALYZE

SELECT COUNT(*) FROM fakedata.testindex

WHERE productname LIKE 'fla%';

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

10 rows affected.

| QUERY PLAN |

|---|

| Finalize Aggregate (cost=144374.60..144374.61 rows=1 width=8) (actual time=613.415..617.124 rows=1 loops=1) |

| -> Gather (cost=144374.39..144374.60 rows=2 width=8) (actual time=613.014..617.114 rows=3 loops=1) |

| Workers Planned: 2 |

| Workers Launched: 2 |

| -> Partial Aggregate (cost=143374.39..143374.40 rows=1 width=8) (actual time=605.984..605.984 rows=1 loops=3) |

| -> Parallel Seq Scan on testindex (cost=0.00..143373.22 rows=465 width=0) (actual time=0.164..602.864 rows=9271 loops=3) |

| Filter: (productname ~~ 'fla%'::text) |

| Rows Removed by Filter: 3714839 |

| Planning Time: 0.081 ms |

| Execution Time: 617.160 ms |

%%sql

SELECT pg_size_pretty (pg_indexes_size('fakedata.testindex'));

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

1 rows affected.

| pg_size_pretty |

|---|

| 381 MB |

Why do you think hash takes up more space here?

When do you think it is best to use hash indexing?

GIN

Before talking about GIN indexes, let me give you a little background on full-text search. Full-text search is typically used when a column contains sentences and you need to find the rows whose sentence contains a particular word. For example, say we want the rows whose sentence column contains the word new.

| row number | sentence |
|---|---|
| 1 | this column has to do some thing with new year, also has to do something with row |
| 2 | this column has to do some thing with colors |
| 3 | new year celebrated on 1st Jan |
| 4 | new year celebration was very great this year |
| 5 | This is about cars released this year |

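We would usually query it like this. It returns the right result, but it is slow. (Our testindex table has no sentence column, so the query below runs the same kind of pattern search on productname.)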

%%sql

SELECT * FROM fakedata.testindex

WHERE productname LIKE '%new%';

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

27510 rows affected.

| nameid | productid | productname | price |

|---|---|---|---|

| 57480 | 176593 | Charles news | $75.85 |

| 57504 | 40949 | step newsprint | $20.51 |

| 57839 | 105207 | newsstand cucumber | $36.26 |

| 57956 | 74890 | Swiss newsstand | $89.07 |

| 58003 | 62195 | woman news | $92.51 |

| 58108 | 170925 | newsprint great-grandmother | $40.24 |

| 58144 | 150276 | minister news | $56.78 |

| 58148 | 214134 | titanium newsstand | $84.03 |

| 58253 | 39191 | whale newsstand | $62.70 |

| 58348 | 263120 | storm news | $12.30 |

| 58470 | 170925 | newsprint great-grandmother | $40.24 |

| 58506 | 62195 | woman news | $92.51 |

| 58535 | 214134 | titanium newsstand | $84.03 |

| 58539 | 84146 | oil news | $91.43 |

| 58540 | 105207 | newsstand cucumber | $36.26 |

| 58723 | 173294 | lung news | $90.10 |

| 58805 | 49151 | comics news | $50.00 |

| 58837 | 150276 | minister news | $56.78 |

| 58869 | 168728 | boat newsprint | $7.31 |

| 58907 | 105207 | newsstand cucumber | $36.26 |

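That's why we use full-text search; I found this blog helpful for understanding it.

SELECT * FROM fakedata.testindex

WHERE to_tsvector('english', sentence) @@ to_tsquery('english','new');

Here, to_tsvector converts the text in the sentence column into a tsvector: a sorted list of distinct words (lexemes) together with their positions in the text.

For example, the first row of the sentence column, "this column has to do some thing with new year, also has to do something with row", is represented internally like this (this is the form produced by the 'simple' configuration; the 'english' configuration would also drop stop words such as this and with, and reduce words to their stems):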

'also':11 'column':2 'do':5,14 'has':3,12 'new':9 'row':17 'some':6 'something':15 'thing':7 'this':1 'to':4,13 'with':8,16 'year':10

to_tsquery builds the search query, and the @@ operator checks whether a tsvector matches it; here we search for new using to_tsquery('english','new').
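As an illustration of the tsvector structure, this Python sketch builds the word -> positions map for the first example sentence and prints it in the same 'word':positions style. It approximates to_tsvector with the 'simple' configuration only (lowercase words split on non-alphanumeric characters, no stop-word removal, no stemming); the `matches` helper is a hypothetical stand-in for a one-word tsquery checked with @@.

```python
import re
from collections import defaultdict


def simple_tsvector(text):
    """Approximate to_tsvector('simple', text): lowercase words -> 1-based positions.

    Unlike the 'english' configuration, this drops no stop words and does no stemming.
    """
    positions = defaultdict(list)
    for pos, word in enumerate(re.findall(r"[a-z0-9]+", text.lower()), start=1):
        positions[word].append(pos)
    return dict(positions)


def format_tsvector(vector):
    """Render in the 'word':pos,pos style, sorted by word."""
    return " ".join(
        "'{}':{}".format(word, ",".join(str(p) for p in vector[word]))
        for word in sorted(vector)
    )


def matches(vector, word):
    """A one-word tsquery: does the word occur anywhere in the text?"""
    return word.lower() in vector


row1 = "this column has to do some thing with new year, also has to do something with row"
vec = simple_tsvector(row1)
print(format_tsvector(vec))
print(matches(vec, "new"), matches(vec, "colors"))  # True False
```

The printed vector matches the representation shown above for row 1.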

Postgres does a pretty good job with full-text search on its own, but to speed up the search we use GIN indexes. Full-text search operates on tsvector values, so we either store a tsvector column or, as we do below, index the expression to_tsvector('english', productname).

Indexes many values (e.g., every word) for the same row

Inverse of a B-tree: each value points to the list of rows that contain it, e.g., "quick", "brown", and "the" all point to row 1

Most commonly used for full-text search
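The inverted-index idea behind GIN can be sketched in Python with the five example sentences from the table above: each word gets a posting list of the rows that contain it, and a query intersects posting lists instead of scanning every sentence. This is a conceptual sketch, not GIN's on-disk format; the tokenizer is the same simplified lowercase split (no stemming or stop words), and `search` with several words is a hypothetical stand-in for an AND query such as to_tsquery('english', 'new & year').

```python
import re
from collections import defaultdict

sentences = {
    1: "this column has to do some thing with new year, also has to do something with row",
    2: "this column has to do some thing with colors",
    3: "new year celebrated on 1st Jan",
    4: "new year celebration was very great this year",
    5: "This is about cars released this year",
}


def build_inverted_index(docs):
    """GIN-style posting lists: each word -> sorted row numbers containing it."""
    postings = defaultdict(set)
    for row, text in docs.items():
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            postings[word].add(row)
    return {word: sorted(rows) for word, rows in postings.items()}


def search(index, *words):
    """Rows containing all the words, found by intersecting posting lists."""
    result = None
    for word in words:
        rows = set(index.get(word.lower(), []))
        result = rows if result is None else result & rows
    return sorted(result or [])


index = build_inverted_index(sentences)
print(search(index, "new"))          # [1, 3, 4]
print(search(index, "year"))         # [1, 3, 4, 5]
print(search(index, "new", "year"))  # [1, 3, 4]
```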

Let's try this on our table. Here is how long the query takes without an index:

%%sql

EXPLAIN ANALYZE SELECT count(*) FROM fakedata.testindex WHERE to_tsvector('english', productname) @@ to_tsquery('english','flavor');

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

10 rows affected.

| QUERY PLAN |

|---|

| Finalize Aggregate (cost=1308216.11..1308216.12 rows=1 width=8) (actual time=17749.324..17751.105 rows=1 loops=1) |

| -> Gather (cost=1308215.89..1308216.10 rows=2 width=8) (actual time=17745.419..17751.095 rows=3 loops=1) |

| Workers Planned: 2 |

| Workers Launched: 2 |

| -> Partial Aggregate (cost=1307215.89..1307215.90 rows=1 width=8) (actual time=17732.049..17732.050 rows=1 loops=3) |

| -> Parallel Seq Scan on testindex (cost=0.00..1307157.70 rows=23276 width=0) (actual time=8.589..17730.227 rows=3158 loops=3) |

| Filter: (to_tsvector('english'::regconfig, productname) @@ '''flavor'''::tsquery) |

| Rows Removed by Filter: 3720952 |

| Planning Time: 24.513 ms |

| Execution Time: 17751.155 ms |

Now let's see how long it takes with an index. First, we need to create the index, here on the expression to_tsvector('english', productname).

%%time

%%sql

DROP INDEX if exists fakedata.hash_testindex_index;

DROP INDEX if exists fakedata.pgweb_idx;

CREATE INDEX if not exists pgweb_idx ON fakedata.testindex USING GIN (to_tsvector('english', productname));

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

Done.

Done.

Done.

CPU times: user 15.6 ms, sys: 5.02 ms, total: 20.6 ms

Wall time: 41.7 s

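Here is how long the query takes with the index. Note that the query uses exactly the same expression as the index definition, to_tsvector('english', productname); otherwise the planner could not use the index.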

%%sql

EXPLAIN ANALYZE SELECT count(*) FROM fakedata.testindex WHERE to_tsvector('english', productname) @@ to_tsquery('english','flavor');

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

12 rows affected.

| QUERY PLAN |

|---|

| Finalize Aggregate (cost=87136.43..87136.44 rows=1 width=8) (actual time=10.970..12.487 rows=1 loops=1) |

| -> Gather (cost=87136.21..87136.42 rows=2 width=8) (actual time=10.636..12.479 rows=3 loops=1) |

| Workers Planned: 2 |

| Workers Launched: 2 |

| -> Partial Aggregate (cost=86136.21..86136.22 rows=1 width=8) (actual time=5.044..5.044 rows=1 loops=3) |

| -> Parallel Bitmap Heap Scan on testindex (cost=384.34..86078.02 rows=23276 width=0) (actual time=1.918..4.796 rows=3158 loops=3) |

| Recheck Cond: (to_tsvector('english'::regconfig, productname) @@ '''flavor'''::tsquery) |

| Heap Blocks: exact=213 |

| -> Bitmap Index Scan on pgweb_idx (cost=0.00..370.37 rows=55862 width=0) (actual time=3.798..3.799 rows=9474 loops=1) |

| Index Cond: (to_tsvector('english'::regconfig, productname) @@ '''flavor'''::tsquery) |

| Planning Time: 0.191 ms |

| Execution Time: 12.529 ms |

Wooohoooo!!! A great improvement: from about 17,750 ms down to about 12.5 ms! By creating a materialized view (we will see more about views in the next class) with a precomputed tsvector column, we can make searches faster still, since it is no longer necessary to redo the to_tsvector calls to verify index matches.

%%sql

CREATE MATERIALIZED VIEW if not exists fakedata.testindex_materialized as select *,to_tsvector('english', productname) as productname_ts

FROM fakedata.testindex;

CREATE INDEX if not exists pgweb_idx_mat ON fakedata.testindex_materialized USING GIN (productname_ts);

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

Done.

Done.

[]

%%sql

EXPLAIN ANALYZE SELECT count(*) FROM fakedata.testindex_materialized WHERE productname_ts @@ to_tsquery('english','flavor');

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

8 rows affected.

| QUERY PLAN |

|---|

| Aggregate (cost=17079.68..17079.69 rows=1 width=8) (actual time=11.253..11.255 rows=1 loops=1) |

| -> Bitmap Heap Scan on testindex_materialized (cost=51.46..17067.12 rows=5027 width=0) (actual time=7.318..10.764 rows=9474 loops=1) |

| Recheck Cond: (productname_ts @@ '''flavor'''::tsquery) |

| Heap Blocks: exact=9148 |

| -> Bitmap Index Scan on pgweb_idx_mat (cost=0.00..50.20 rows=5027 width=0) (actual time=5.839..5.839 rows=9474 loops=1) |

| Index Cond: (productname_ts @@ '''flavor'''::tsquery) |

| Planning Time: 2.109 ms |

| Execution Time: 11.290 ms |

This index does speed things up when we search for a particular word in a column, like flavor. But it won't help when we search for an arbitrary pattern inside the text, e.g., the query we have been trying from the beginning:

%%sql

EXPLAIN ANALYZE

SELECT COUNT(*) FROM fakedata.testindex

WHERE productname LIKE '%fla%';

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

10 rows affected.

| QUERY PLAN |

|---|

| Finalize Aggregate (cost=144374.60..144374.61 rows=1 width=8) (actual time=1130.358..1135.903 rows=1 loops=1) |

| -> Gather (cost=144374.39..144374.60 rows=2 width=8) (actual time=1130.349..1135.896 rows=3 loops=1) |

| Workers Planned: 2 |

| Workers Launched: 2 |

| -> Partial Aggregate (cost=143374.39..143374.40 rows=1 width=8) (actual time=1125.655..1125.656 rows=1 loops=3) |

| -> Parallel Seq Scan on testindex (cost=0.00..143373.22 rows=465 width=0) (actual time=0.105..1120.757 rows=22257 loops=3) |

| Filter: (productname ~~ '%fla%'::text) |

| Rows Removed by Filter: 3701853 |

| Planning Time: 0.072 ms |

| Execution Time: 1135.940 ms |

Hence, we need a different flavor of GIN indexing: the trigram index. I found this blog helpful for understanding it.

Trigram Details (OPTIONAL)

Trigrams are a special case of N-grams: the text is split into every sequence of three consecutive characters, and the result is treated as a set. Before doing this, pg_trgm prepares each word of the text:

Non-alphanumeric characters are ignored, so they only separate words.

Two blank spaces are added at the beginning of each word.

One blank space is added at the end of each word.

The trigram set corresponding to "flavor halibut" looks like this:

{"  f","  h"," fl"," ha",ali,avo,but,fla,hal,ibu,lav,lib,"or ","ut ",vor}
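The padding rule and the way a trigram index answers a LIKE '%...%' search can be sketched in Python. This is an approximation of pg_trgm, not its implementation: lowercasing and the `[a-z0-9]+` word split are assumptions of the sketch, the product list is hypothetical, and the pattern handling is simplified (the needle's inner trigrams only). The candidate rows found through the posting lists are then rechecked against the real values, like the Recheck Cond step in the plans.

```python
import re
from collections import defaultdict


def trigrams(text):
    """Approximate show_trgm(): pad each word with two spaces before and one after,
    then collect every 3-character window. Lowercasing is an assumption of this sketch."""
    grams = set()
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        padded = "  " + word + " "
        grams.update(padded[i:i + 3] for i in range(len(padded) - 2))
    return grams


def build_trigram_index(values):
    """GIN-style posting lists: trigram -> ids of rows containing it."""
    index = defaultdict(set)
    for row_id, value in enumerate(values):
        for gram in trigrams(value):
            index[gram].add(row_id)
    return index


def like_contains(values, index, needle):
    """Rough LIKE '%needle%': intersect the posting lists of the needle's trigrams,
    then recheck the candidates against the real values."""
    needle = needle.lower()
    grams = {needle[i:i + 3] for i in range(len(needle) - 2)}
    if grams:
        candidates = set.intersection(*(index.get(g, set()) for g in grams))
    else:
        candidates = set(range(len(values)))  # needle too short to use the index
    return sorted(r for r in candidates if needle in values[r].lower())


print(sorted(trigrams("flavor halibut")))
products = ["flavor halibut", "steel flask", "flat tire", "oak table", "flavor cod"]
tri_idx = build_trigram_index(products)
print(like_contains(products, tri_idx, "fla"))     # [0, 1, 2, 4]
print(like_contains(products, tri_idx, "flavor"))  # [0, 4]
```

The sorted trigram set printed first has the same 15 entries as the show_trgm output below. Unlike the B-tree, this index finds "steel flask", because the trigram fla is indexed wherever it occurs inside a word.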

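These trigrams play the role that words played in full-text search: a GIN index maps each trigram to the rows that contain it, and a LIKE '%...%' pattern is answered by looking up the pattern's trigrams. To build this index, add gin_trgm_ops (provided by the pg_trgm extension) as the operator class. Let's do it.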

%%sql

CREATE EXTENSION IF NOT EXISTS pg_trgm;

%%sql

select show_trgm('flavor halibut');

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

1 rows affected.

| show_trgm |

|---|

| [' f', ' h', ' fl', ' ha', 'ali', 'avo', 'but', 'fla', 'hal', 'ibu', 'lav', 'lib', 'or ', 'ut ', 'vor'] |

%%sql

DROP INDEX if exists fakedata.pgweb_idx;

CREATE INDEX pgweb_idx ON fakedata.testindex USING GIN (productname gin_trgm_ops);

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

Done.

Done.

[]

Let's see whether it speeds up the '%fla%' query that we have tried several times before.

%%sql

EXPLAIN ANALYZE

SELECT COUNT(*) FROM fakedata.testindex

WHERE productname LIKE '%fla%';

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

8 rows affected.

| QUERY PLAN |

|---|

| Aggregate (cost=4149.06..4149.07 rows=1 width=8) (actual time=84.509..84.511 rows=1 loops=1) |

| -> Bitmap Heap Scan on testindex (cost=72.43..4146.27 rows=1117 width=0) (actual time=17.269..81.094 rows=66772 loops=1) |

| Recheck Cond: (productname ~~ '%fla%'::text) |

| Heap Blocks: exact=46066 |

| -> Bitmap Index Scan on pgweb_idx (cost=0.00..72.15 rows=1117 width=0) (actual time=8.929..8.930 rows=66772 loops=1) |

| Index Cond: (productname ~~ '%fla%'::text) |

| Planning Time: 0.091 ms |

| Execution Time: 84.551 ms |

%%sql

EXPLAIN ANALYZE

SELECT COUNT(*) FROM fakedata.testindex

WHERE productname LIKE '%flavor%';

* postgresql://postgres:***@database-1.ccf3srhijxm7.us-east-1.rds.amazonaws.com:5432/postgres

8 rows affected.

| QUERY PLAN |

|---|

| Aggregate (cost=4340.50..4340.51 rows=1 width=8) (actual time=29.317..29.319 rows=1 loops=1) |

| -> Bitmap Heap Scan on testindex (cost=263.87..4337.70 rows=1117 width=0) (actual time=6.937..28.836 rows=9474 loops=1) |

| Recheck Cond: (productname ~~ '%flavor%'::text) |

| Heap Blocks: exact=8921 |

| -> Bitmap Index Scan on pgweb_idx (cost=0.00..263.59 rows=1117 width=0) (actual time=5.442..5.443 rows=9474 loops=1) |

| Index Cond: (productname ~~ '%flavor%'::text) |

| Planning Time: 0.084 ms |

| Execution Time: 29.356 ms |

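Finally, the planner uses the index for this query, and it is much faster: about 85 ms for '%fla%' instead of about 1,000 ms with a sequential scan, and about 29 ms for '%flavor%'.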

GIST

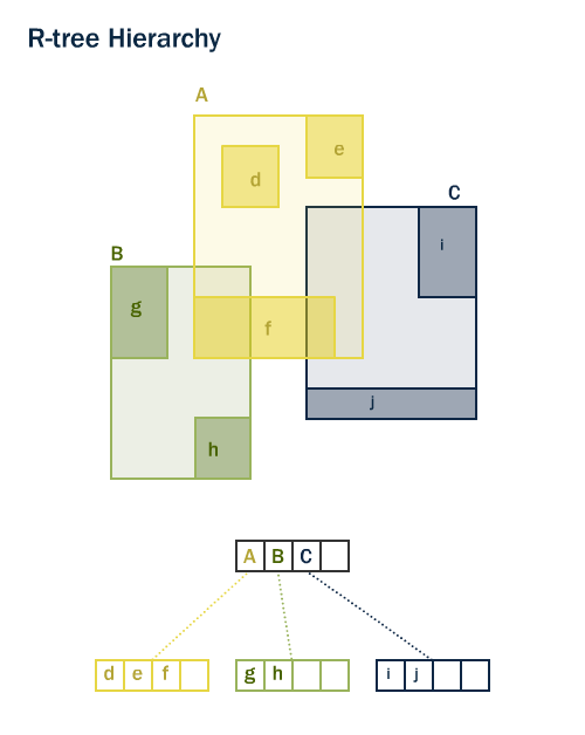

Supports many search types, including spatial and full text.

Can be extended to custom data types

Balanced tree-based method (nodes & leaves)
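GiST is a framework rather than a single structure, so here is one thing it can implement, an R-tree-style spatial index, sketched in Python. Every node stores a bounding box that covers everything below it, and a search skips any subtree whose box cannot overlap the query. The points and the two-leaf tree are hypothetical, and a real GiST also handles page splits and rebalancing, which this sketch leaves out.

```python
from dataclasses import dataclass, field


@dataclass
class Box:
    xmin: float
    ymin: float
    xmax: float
    ymax: float

    def overlaps(self, other: "Box") -> bool:
        return not (other.xmin > self.xmax or other.xmax < self.xmin
                    or other.ymin > self.ymax or other.ymax < self.ymin)

    def contains_point(self, x: float, y: float) -> bool:
        return self.xmin <= x <= self.xmax and self.ymin <= y <= self.ymax


@dataclass
class Node:
    box: Box                                      # covers everything stored below this node
    children: list = field(default_factory=list)  # internal node: child Nodes
    points: list = field(default_factory=list)    # leaf node: (label, x, y) entries


def leaf(points):
    xs = [x for _, x, _ in points]
    ys = [y for _, _, y in points]
    return Node(Box(min(xs), min(ys), max(xs), max(ys)), points=points)


def internal(children):
    box = Box(min(c.box.xmin for c in children), min(c.box.ymin for c in children),
              max(c.box.xmax for c in children), max(c.box.ymax for c in children))
    return Node(box, children=children)


def search(node, query, leaves_read):
    """Labels of points inside `query`; subtrees whose box misses `query` are skipped."""
    if not node.box.overlaps(query):
        return []
    if node.children:
        return [hit for child in node.children for hit in search(child, query, leaves_read)]
    leaves_read.append(node)
    return [label for label, x, y in node.points if query.contains_point(x, y)]


root = internal([leaf([("a", 1, 1), ("b", 2, 3)]), leaf([("c", 8, 8), ("d", 9, 7)])])
leaves_read = []
print(search(root, Box(0, 0, 3, 3), leaves_read), len(leaves_read))  # ['a', 'b'] 1
```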

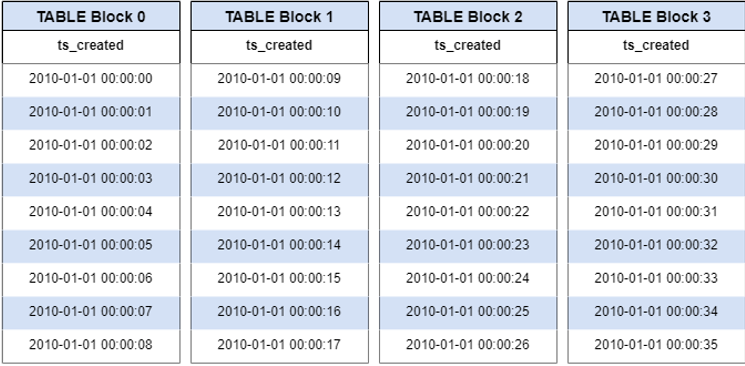

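BRIN

Indexes summary statistics for ranges of blocks (pages), which works best when the data is ordered on disk

Stores the minimum and maximum value of each block range in the index

A search first finds the block ranges that could contain the value, then scans only those blocks for the matching rows

Best for very large tables whose values follow the physical row order (e.g., timestamps of rows that are only ever appended)

This is essentially how we read a book's index.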
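Here is a minimal Python sketch of the BRIN idea: keep only (min, max) per block and skip blocks whose range cannot contain the target. The 4-row blocks and the values are hypothetical (Postgres summarizes ranges of many pages, not 4 rows). Running it on ordered and on shuffled copies of the same values shows why physical ordering matters.

```python
def build_brin(values, rows_per_block=4):
    """Per block of consecutive rows, keep only (first_row, min, max)."""
    summaries = []
    for start in range(0, len(values), rows_per_block):
        block = values[start:start + rows_per_block]
        summaries.append((start, min(block), max(block)))
    return summaries


def brin_lookup(values, summaries, target, rows_per_block=4):
    """Skip blocks whose [min, max] cannot contain target; scan the rest."""
    hits, blocks_read = [], 0
    for start, lo, hi in summaries:
        if lo <= target <= hi:
            blocks_read += 1
            block = values[start:start + rows_per_block]
            hits.extend(start + i for i, v in enumerate(block) if v == target)
    return hits, blocks_read


ordered = list(range(100, 116))  # e.g. timestamps of appended rows
shuffled = [100, 115, 104, 111, 101, 114, 105, 110, 102, 113, 106, 109, 103, 112, 107, 108]
print(brin_lookup(ordered, build_brin(ordered), 109))    # ([9], 1)
print(brin_lookup(shuffled, build_brin(shuffled), 109))  # ([11], 4)
```

With ordered data, one block is read; with the same values shuffled, every block's range covers 109, so the "index" saves nothing.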

Usage (on a table or materialized view):

CREATE INDEX index_name ON schema.tablename USING BRIN (columnname);

We will use this later when we go through our Twitter example (tomorrow’s lecture).

Now that we have learned about indexes, can you answer these questions?

What are indexes?

What are the different types of indexes?

When to use indexes?

How to use an index?

Why do I need an index?

Compared to Other Services (optional)

BigQuery, AWS RedShift

Don't use traditional indexes; the infrastructure scans whole "columns" instead

MongoDB

Single-field & compound (multi-field) indexes (similar to b-tree)

spatial (similar to GIST)

text indexes (similar to GIN)

hash index

Neo4j (Graph Database)

b-tree and full text

Summary

Indexes are important to speed up operations

Indexes are optimized for certain kinds of data and queries

Index performance can be assessed using EXPLAIN.

Indexes come at a cost (disk space, and extra work on every insert and update), so choose them carefully.

Class activity

Note

Check Worksheet 3 on Canvas for detailed instructions on the activity and how to submit it.

Setting up indexes

Compare query performance with and without indexes, and across different types of indexes.